The AI Bottleneck Investors Keep Misdiagnosing

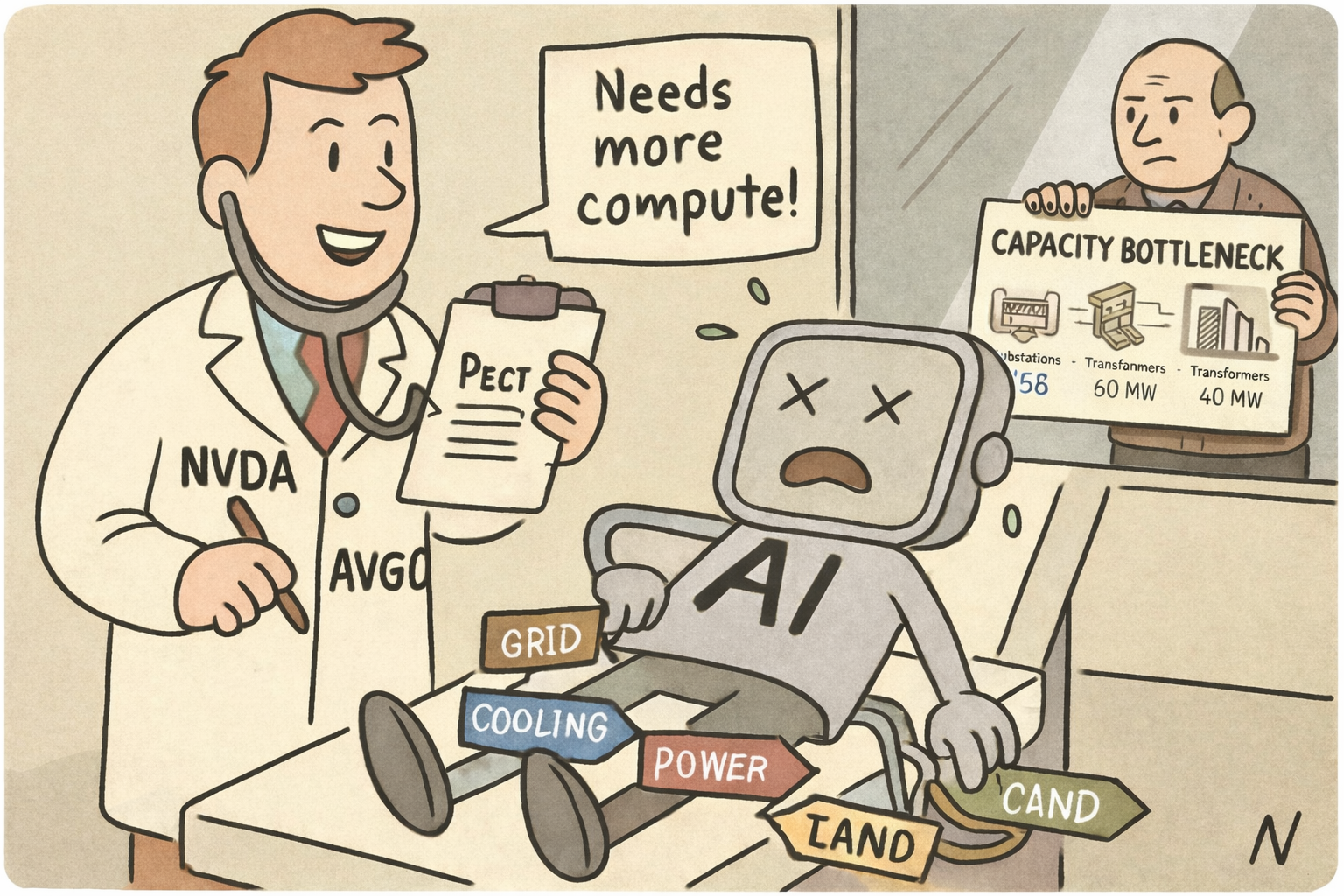

The real limit on AI isn’t compute — it’s capacity.

There’s a moment in every AI conversation where someone — usually a CEO — leans back, exhales, and says the same line:

“We need more compute.”

It sounds urgent. It sounds technical. It sounds like a GPU problem.

And for a while, I believed that too.

But the more I mapped the AI pipeline — chips, networking, optics, power, cooling, grid — the more something started to ache. Not because the system was complicated, but because the conversation was incomplete.

We weren’t asking the right question.

The First Bottleneck: The One Everyone Can See

When Jonathan Ross from Groq says “we need more compute,” he’s talking about the bottleneck engineers love:

not enough GPUs

not enough networking throughput

not enough optical bandwidth

not enough accelerators

This bottleneck is fast, visible, and solvable. Throw money at it, and it moves.

This is why investors sprint toward:

NVDA (NVIDIA)

AVGO (Broadcom)

ANET (Arista Networks)

CIEN / LITE / COHR (Ciena, Lumentum, Coherent)

It feels like the center of the story because it moves at the speed of ambition.

But that’s not the ache.

The Ache: The Bottleneck That Doesn’t Move

The ache shows up when you zoom out.

Because after you solve the engineering bottleneck, you hit the one that doesn’t bend:

power

cooling

substations

transformers

land

permitting

the grid

This bottleneck doesn’t care about capex or innovation cycles. It doesn’t respond to urgency. It doesn’t scale with demand.

It defines the ceiling of the entire AI ecosystem.

And almost no one wants to talk about it.

Because it’s slow. Because it’s physical. Because it’s regulated. Because it’s inconvenient.

But it’s also the truth.

The Two‑Phase Reality Investors Keep Missing

The AI boom isn’t one bottleneck. It’s two — and they arrive in sequence.

Phase 1: The Technical Bottleneck
→ GPUs, networking, optics
→ fast, exciting, solvable
→ investors pile in early

Phase 2: The Physical Bottleneck
→ power, cooling, grid
→ slow, structural, immovable
→ investors arrive late

The ache is that Phase 2 is the one that actually decides how big AI can get — not Phase 1.

But because Phase 1 moves faster, it steals the spotlight.

The Misdiagnosis That Shapes the Market

When CEOs say “we need more compute,” most investors hear:

“Buy more chips.”

But the diagnostic question is:

Is this a performance bottleneck or a capacity bottleneck?

If it’s performance → engineering will fix it.

If it’s capacity → physics and regulation will decide the timeline.

This single distinction explains:

why ANET and AVGO run early

why VRT (Vertiv) and utilities run late

why optical networking is exploding

why grid modernization is inevitable

why the AI boom is already hitting its second wall

The ache is realizing that the bottleneck investors obsess over is not the one that defines the future.

The Real Story: The Ceiling Is Not Where People Think It Is

The AI ecosystem won’t be limited by:

GPU supply

networking throughput

optical bandwidth

Those are solvable.

It will be limited by:

megawatts

substations

transformers

cooling

land

regulation

The bottleneck that matters most is the one that moves slowest.

And that’s the part of the story investors still underestimate.

Final Line

If you want to understand where AI is going, stop listening to the bottleneck that screams the loudest. Start listening to the one that refuses to move.