How Often Does the Agent Misinterpret the User’s Intent — and Does the User Get a Chance to Correct It?

There’s a moment in every AI interaction that rarely gets examined, yet it determines whether the agent feels intelligent or quietly off. It happens before retrieval, before reasoning, before any answer appears.

It’s the moment the agent decides: “This is what you meant.”

And the diagnostic question that exposes this moment is deceptively simple:

How often does the agent misinterpret the user’s intent — and does the user get a chance to correct it?

This is the User Trust Probe. It doesn’t measure accuracy. It measures something earlier and more fragile:

Does the agent’s interpretation match the user’s meaning — and if not, is that interpretation correctable?
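As a rough sketch, the probe can be reduced to two numbers computed over logged turns: how often the interpretation missed, and how often a miss was at least visible to the user. The `Turn` record and its field names are assumptions made for illustration, not part of any particular agent framework, and labeling whether an interpretation "matched" would in practice need human review or user feedback.

```python
from dataclasses import dataclass

@dataclass
class Turn:
    # Hypothetical log record; both fields are assumed labels.
    interpretation_matched: bool  # did the agent's reading match the user's meaning?
    interpretation_shown: bool    # was that reading surfaced to the user?

def trust_probe(turns):
    """Return (misinterpretation_rate, correctable_share).

    correctable_share is the fraction of misinterpreted turns in which
    the user saw the interpretation and so had a chance to correct it.
    """
    missed = [t for t in turns if not t.interpretation_matched]
    rate = len(missed) / len(turns) if turns else 0.0
    if not missed:
        # Nothing was misread, so nothing needed correcting.
        return rate, 1.0
    shown = sum(t.interpretation_shown for t in missed)
    return rate, shown / len(missed)
```

A high first number with a high second number describes an agent that guesses badly but recovers; a low first number with a low second number describes one that is usually right and silently wrong the rest of the time.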

Where Misalignment Actually Begins

Nearly every agent rewrites user queries internally. It has to: people speak in shorthand, fragments, and layered intentions.

But this normalization step is also where the agent quietly takes control of the conversation. If it gets the interpretation wrong, everything downstream is wrong, even if the retrieval is perfect.

And most systems never show this step to the user.

Three Patterns That Reveal the Problem

1. The Agent Answers a Narrower Question Than the User Asked

A user says: “Can you help me understand our Q4 results?”

The agent silently normalizes it into: “Summarize Q4 revenue.”

But maybe the user meant:

– margins
– expenses
– customer churn
– or simply “I’m confused by the earnings call”

The agent answers confidently — but to the wrong question.

2. The Agent Assumes Context the User Never Provided

A user asks: “What’s the status of the launch?”

The agent assumes:

– which product
– which region
– which team

It fills in gaps the user didn’t specify. The answer is polished, structured, and completely misaligned.
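One alternative to guessing, sketched here under simple assumptions, is to check which details the user actually supplied and ask about the rest. The slot names (`product`, `region`, `team`) come from the example above; the function names and the wording of the replies are hypothetical.

```python
# Details assumed to be required before a "launch status" question can be answered.
REQUIRED_SLOTS = ("product", "region", "team")

def missing_slots(known, required=REQUIRED_SLOTS):
    """Return the required details the user has not supplied."""
    return [slot for slot in required if not known.get(slot)]

def respond_or_ask(known):
    """Ask about gaps instead of silently filling them in."""
    gaps = missing_slots(known)
    if gaps:
        return "Before I answer: which " + ", ".join(gaps) + " do you mean?"
    return (
        f"Checking launch status for {known['product']} "
        f"in {known['region']} ({known['team']})."
    )
```

With only a product known, `respond_or_ask({"product": "Atlas"})` asks about region and team rather than assuming them. A real system would likely ask only when the gap changes the answer, since asking every time has its own cost.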

3. The User Has No Space to Say “That’s Not What I Meant”

This is the real failure.

The agent never reveals its interpretation. The user never sees the rewrite. The misunderstanding stays hidden.

The user feels it immediately: “That’s not what I asked.”

Trust erodes not because the answer is wrong, but because the interpretation was never visible or correctable.

A Useful Contrast

If the glossary question was about alignment latency, this question is about interpretation recoverability.

One asks: How long does the agent stay wrong?

The other asks: Can the user pull the agent back when it drifts?

Together, they expose two different layers of trust.

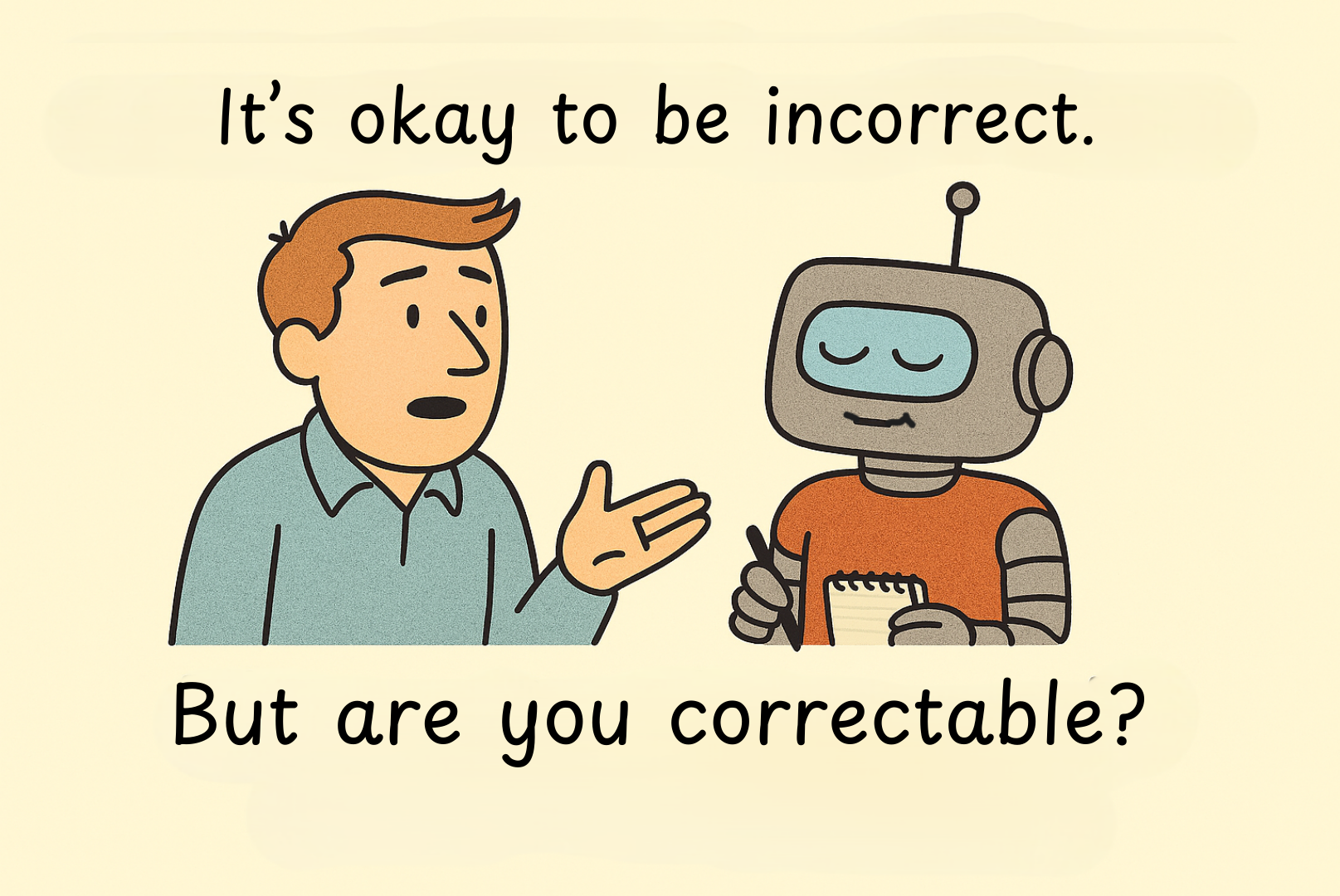

Why Correctability Matters More Than Correctness

Most teams obsess over whether the agent’s answer is correct. But correctness is irrelevant if the agent answered the wrong question.

The real success criterion is:

Does the agent make its interpretation visible enough for the user to correct it?

If the agent shows its understanding, the user can say:

– “Yes, that’s what I meant.”
– or “No, that’s not it. Here’s what I actually need.”

This tiny moment of correction prevents entire chains of misaligned reasoning.
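A minimal sketch of that moment, assuming the agent keeps its rewrite as an explicit value rather than an internal detail. The `Interpretation` type, the prompt wording, and the rule that only "yes" counts as confirmation are all illustrative choices.

```python
from dataclasses import dataclass

@dataclass
class Interpretation:
    original: str            # what the user typed
    rewritten: str           # what the agent thinks was meant
    confirmed: bool = False  # has the user accepted this reading?

def show(interp):
    """Surface the rewrite so the user can accept or reject it."""
    return f'I read this as: "{interp.rewritten}". Is that right?'

def apply_feedback(interp, feedback):
    """'yes' confirms; any other reply is treated as the user's correction."""
    text = feedback.strip()
    if text.lower() in {"yes", "y"}:
        return Interpretation(interp.original, interp.rewritten, confirmed=True)
    # The correction replaces the rewrite and stays unconfirmed,
    # so the agent shows the new reading before acting on it.
    return Interpretation(interp.original, text, confirmed=False)
```

Starting from the Q4 example, an interpretation of "Summarize Q4 revenue" that receives the reply "Explain why Q4 margins fell" becomes a new, unconfirmed interpretation, and downstream work begins only once `confirmed` is true.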

It restores agency. It builds trust. It turns the agent into a collaborator, not a guesser.

Correctability becomes the emotional center of the interaction — the difference between feeling understood and feeling dismissed.

The Pattern Emerging Across Teams

Across agentic systems, one insight keeps resurfacing:

Users don’t just want correct answers — they want to be understood.

And the most reliable way to get there is to make the agent’s interpretation:

– visible
– correct
– correctable

When that happens, trust grows naturally. When it doesn’t, even the best answers feel strangely hollow.